What's new

Feb 17, 2025- In Dec 2024, we mistakenly reported incorrect scores for each solver. We sincerely apologize for this error. The overall winner remains unchanged, and the ranking adjustments are minimal. The updated results are find here.

- The program for the ICGCA symposium, where the results will be unveiled, is available here.

- ICGCA 2024 Symposium, where the results are revealed, will be held at Japanese Society for Artificial Intelligence on December 21pm, 2024 @ Keio University at Hiyoshi, Kanagawa, Japan.

- The submission deadlines are fixed:

- Benchmark submission (optional) is due September 10, 23:59 (UTC-11), 2024.

- Solver submission (mandatory) is due

September 17, 23:59 (UTC-11), 2024.September 24, 23:59 (UTC-11), 2024. - Paper submission (mandatory) is due October 15, 23:59 (UTC-11), 2024.

- Public benchmarks for directed graphs are available and registerer will be notified of download URL.

- Registration page is open. After registration, public benchmarks for undirected graphs can be downloaded. Benchmarks for directed graphs will be available soon.

- Schedule is updated. A FAQ (the top item) is added.

Overview

After the resounding success of ICGCA 2023, we are excited to announce the planning of ICGCA 2024.

The graph counting problem, i.e., counting all subgraphs that satisfy specified constraints for a given graph, is a key technique to address various social issues that can be abstracted as graphs. This problem has attracted attention in many applications, e.g., reliability evaluation and optimization of networked infrastructure, structural analysis of human society such as epidemiological studies and electoral districting, etc. You will find a useful and funny example on YouTube, created by a predecessor project of AFSA. As the YouTube movie shows, the graph counting problem is extremely challenging; e.g., even the small 10x10 grid graph has 1,568,758,030,464,750,013,214 paths!

In ICGCA 2023, contestants submitted their solvers counting paths on a given graph. Building on the strong foundation set by the previous year, ICGCA 2024 aims to explore new frontiers in graph counting algorithms. Here are some key changes and additions for 2024's competition:

- Expansion to Directed Graphs: While ICGCA 2023 focused exclusively on undirected graphs, ICGCA 2024 will encompass both undirected and directed graphs. By introducing a directed graph category, we anticipate the emergence of novel approaches distinct from last year.

- Distinction Between Parallel and Single-Thread Solvers: To facilitate a more nuanced comparison of algorithms, we will separately evaluate parallel and single-thread solvers. This separation allows contestants to demonstrate the efficiency and innovation of their algorithms in different computational contexts.

- Private Benchmark Instances: A portion of the benchmarks used for evaluation will be kept private. The introduction of private instances aims to prevent overfitting to specific sets of instances, encouraging the submission of more generally applicable algorithms. Furthermore, contestants are invited to contribute to the benchmark pool. While not mandatory, this opportunity enables contestants to enrich the diversity and complexity of the benchmarks.

ICGCA is held by the Algorithmic Foundations for Social Advancement (AFSA), one of the most significant research projects in computer science in Japan, to foster the foundation of computer science. The competition is held online in the following schedule.

- ICGCA 2024 web site is open in January, 2024.

- Public benchmarks will be available in May, 2024.

- Benchmark submission (optional) is due September 10, 23:59 (UTC-11), 2024.

- Solver submission (mandatory) is due

September 17, 23:59 (UTC-11), 2024.September 24, 23:59 (UTC-11), 2024. - Paper submission (mandatory) is due October 15, 23:59 (UTC-11), 2024.

- Results will be revealed on December 21pm, 2024 @ Keio University at Hiyoshi , Kanagawa, Japan.

The solvers will be ranked by accuracy, computation time, and/or ideas. Details of the competition are shown below. Note that the following are still under consideration and may be changed until April, 2024. We would appreciate your feedback to finalize the competition's details: see the organizers for the contact information.

Problem and benchmarks

The problem is counting all paths that satisfy given constraints on a given graph (the above movie shows some instances of this problem). This problem is known to be in #P-complete, a computationally challenging class.

Input

Each benchmark (an instance) consists of the following input.- An undirected or directed graph,

- Path terminals, and

- The maximum path length (the maximum number of edges on the path).

Output

- The number of simple paths between the terminals under the path length constraint.

- Paths are distinguished just by their node sequences, even if the graph has multiple-edges/arcs or self-loops. Note that the path length is defined by the number of edges (or equally the number of nodes minus one).

- Zero-length paths are not counted even if the terminals are identical.

Example benchmarks

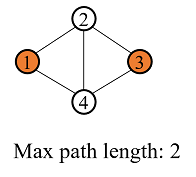

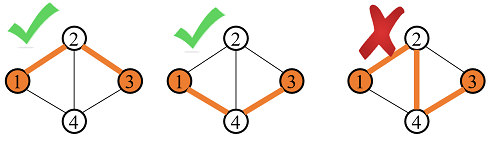

- The input includes a graph with a terminal pair (vertices 1 and 3) and the maximum path length of two.

- The output should be "2"; there are two paths between the terminal pair with at most two edges, as follows.

Example code for undirected graphs

Although most contestants are likely to use low-level programming languages like C/C++, we, for clarity, show a Python example using Graphillion, a graph library featuring graph enumeration algorithms.

# Input

E = [(1, 2), (1, 4), (2, 3), (2, 4), (3, 4)] # An undirected graph

L = 2 # The maximum path length

T = (1, 3) # The path terminals

# Initialization

from graphillion import GraphSet

GraphSet.set_universe(E)

# Count the paths

n = len(GraphSet.paths(*T).smaller(L + 1))

# Output

print(n) # 2 pathsNumber of benchmarks

In the competition, 100 benchmarks are given for each category: 50 public instances and 50 private instances. The benchmarks are prepared separately for undirected and directed graphs, but shared among single-thread and multi-thread categories.

How to download public benchmarks

Contestants are supposed to register to download public benchmarks.Categories

ICGCA 2024 holds the following four categories.- Single-thread solvers for undirected graphs.

- Multi-thread solvers for undirected graphs.

- Single-thread solvers for directed graphs.

- Multi-thread solvers for directed graphs.

Rules

Contestants

Contestants can be individuals or teams. A team can submit one solver for each category. Note that a person can belong to at most two teams.

Evaluation metrics

- The number of benchmarks correctly solved in a time budget.

- The time budget is 600 seconds in wall-clock time for each benchmark.

- Submitted solvers are supposed to be executed on an organizers' computer described in the evaluation environment.

- Solvers that gave some benchmarks wrong answers are disqualified. Note that since path-counting problems are difficult to verify the correctness of the answers, this rule would only apply to relatively small benchmarks that are easily solvable by the organizers.

- An idea inspiring algorithms.

- Algorithmic ideas of the solver are evaluated by contestants' votes. Contestants will be personally notified on how to vote.

Input format

A benchmark is given in an extended DIMACS file format. Each line begins with a letter that defines the rest of the line. The legal lines are:

- c Comment: the remainder of the line is ignored.

- p Problem: must be of form p edge n m where n is the number of nodes (to be numbered 1..n) and m the number of edges.

- e Edge: must be of form e v1 v2 where v1 and v2 are the endpoints of the edge. The edge is from v1 to v2 when directed.

- l Length: must be of form l len where len is the maximum path length (the number of edges on the path).

- t Terminals: must be of form t v1 v2 where v1 and v2 are the path terminals.

The following is the input file of the above example benchmark.

c This is an example.

p edge 4 5

e 1 2

e 1 4

e 2 3

e 2 4

e 3 4

l 2

t 1 3For directed graphs, edge e v1 v2 lines are replaced with arch a v1 v2, which means the arc from v1 to v2.

Output format

Path-counting solvers are supposed to output just a single integer of path count to stdout.Specifications of the submitted solver

Path-counting solvers must be compiled with make, and the executable file must be named a.out. The organizers will run the solver by ./a.out < <benchmark-file> and examine the output number as the path count. To recap, what the organizers will do are as follows.

$ make

$ ./a.out < <benchmark-file> # Run for each benchmark

??? # Interpret the output number as the path countEvaluation environment

Submitted solvers are supposed to be executed on the following environment:- Hardware: Intel NUC 12 Pro Kit NUC12WSHi7

- 12 CPU cores, no GPU, 64 GB main memory , and 2 TB SSD.

- Software: Ubuntu server 22.04 LTS with the following development packages

- apt-get install build-essential rust-all libboost-dev python3-pip cmake openmpi-bin libopenblas-dev libx11-dev default-jdk

- pip install docker psutil

- Docker installed following the official docker page.

If you have any requests on the evaluation environment, e.g., installation of other packages, please send the requests to the organizers.

Evaluation script

Your solver will be evaluated by this Python script. You can use it for pre-checking your solver if you wish. To run the script, follow the instructions below:

python icgca-evaluation.py -u {user} -o {output} -bms {benchmark_dir}

Please ensure that the Makefile and a.out files are in the directory /home/icgca/{user}/submission/. Additionally, store the ICGCA benchmarks (*.col) in the directory of {benckmark_dir} (undirected or directed). The script will save the results to {output}/{user}-results.csv.

Kindly note that this script is designed for our evaluation purposes and may not be compatible with other environments. You can freely modify the script to make it work in your environment. Keep in mind that the execution of this script is solely at your own risk and responsibility.

If you encounter any critical issues that may affect the evaluation's accuracy, please inform us promptly.

Registration

Contestants need to register for the competition to download the public benchmarks. A team at the registration is tentative, and it will be finalized at the submission.

The registration page is here.

Submission

To proceed to the submission process, contestants need to register in advance. Registered contestants will be notified of a personalized submission page via email around July. Don't hesitate to contact the organizers if you have not received the notification email. Please follow the specific instructions provided in the email to complete your submission.

Submission files

Contestants are supposed to submit their solver with source codes and a short paper. Contestants also can submit instances, which may be selected as benchmarks for the evaluation.

- Solver

- Solvers should be submitted separately for each category; if a team wants to participate in all four categories, they need to submit four solvers (their implementation can be different).

- The solver is submitted in either of the following formats:

- Makefile with source codes, or

- Statically linked binary with source codes used to compile.

- The solver must satisfy the specifications of the submitted solver, i.e., it can be built with make command and executed with ./a.out < <benchmark-file>.

- To follow the specification, refer to the following samples:

- Normal Makefile. Makefile is used to build a.out.

- CMake. Makefile is used to trigger cmake and move build/a.out to the current directory.

- Docker. Makefile is used to trigger docker built, whereas a.out of a shell script is prepared to call docker container run.

- All the submitted source codes will be made available online on the competition site after the submission due.

- Submitted solvers must follow an open source license (e.g. Apache2, MIT, or public domain) and must contain a LICENSE.md or LICENSE.txt file at the root.

- Do not hard-code the benchmark solutions in your solver!

- Short paper

- A single paper is submitted from each team, regardless of participation categories.

- The paper should briefly describe your solver's algorithmic ideas.

- The paper can be in any format, e.g., a one-page extended abstract.

- The paper must include the team name and team members.

- Instances (optional)

- Each team can submit at most ten instances of undirected graphs and/or ten instances of directed graphs.

- The instances must follow the specified format. It is strongly recommended to write the origin of the graph in the comment line.

- The organizers will select instances used in the evaluation depending on the hardness. The selected instances will be revealed after the competition, as all the benchmarks will be available.

Event

Although the competition is held online, the results will be revealed at Japanese Society for Artificial Intelligence on December 21, 2024. Anyone including but not limited to contestants is welcome to join the event.

Past events

Organizers

Members:- Takeru Inoue (NTT, Japan)

- Norihito Yasuda (NTT, Japan)

- Hidetomo Nabeshima (University of Yamanashi, Japan)

- Masaaki Nishino (NTT, Japan)

- Shuhei Denzumi (NTT, Japan)

- Shin-ichi Minato (Kyoto University, Japan)

Support: KAKENHI Grant-in-Aid for Transformative Research Areas (A) "Algorithmic Foundations for Social Advancement"

Contact: icgca-contact [at] afsa.jp

FAQ

- Q. Can I use fixed-width integers?

- A. Okay, but your solver must gracefully exit when an overflow occurs; if your solver does not account for overflows and returns incorrect results, it may be disqualified.

- Q. Should zero-length paths be counted when the terminals are identical?

- A. Zero-length paths are not counted even if the terminals are identical.

- Q. Could you show the size of the maximum instance in private benchmarks?

- A. The maximum sizes in the current public and private benchmarks are as follows.

- Undirected graphs:

- Max number of vertices: 2000

- Max number of edges: 5298

- Directed graphs:

- Max number of vertices: 3774768

- Max number of edges: 16518948

- Q. Will solvers run on a P-core in the single-thread track?

- A. Yes. A P-core is allocated to the solver using taskset command.

- Is it okay to find only simple paths?

- A. Yes. Paths are distinguished just by their node sequences, even if the graph has multiple-edges/arcs or self-loops.

- Q. Summarize the differences from ICGCA2023.

- A. The following are the differences:

- Tracks: Digraph and single-thread are added.

- Benchmarks: Private ones are introduced. Contestants can submit benchmarks. Benchmarks for all-pairs (PCA) have been discontinued.

- Solvers: Solvers that output even one wrong solution will be disqualified. Submitted codes cannot include operations that require root privileges.

- Q. Is the PCA (path counting for all vertex pairs) problem missed in ICGCA 2024?

- A. Yes. ICGCA 2024 only provides the PCS (path counting for a single vertex pair) problem.

- Q. Will other processes run in the evaluation environment?

- A. Evaluation will be performed in the Ubuntu server in the initial state with apt-get as described above. Each submitted solver will run exclusively.

- Q. Under what temperature will the evaluation be performed?

- A. Air-conditioning will be appropriately used to avoid unfairness among contestants.

- Q. What is the maximum benchmark size in the evaluation?

- A. Although it depends on instances to be submitted from contestants, we assume that the maximum number of edges is less than 10,000.

- Q. Can the number of pre-counted paths be hard-coded or downloaded from the network?

- No. Submitted solvers cannot include the number of paths or other information used for each benchmark. Such solvers will be disqualified.

- Q. Can contestants check the compilation result in the evaluation environment beforehand?

- A. Yes. Feel free to get in touch with the organizers.

- Q. Does "open source" require the disclaimer of intellectual property rights?

- A. No, contestants are not required to give up their intellectual property rights. However, we would appreciate it if academic use is free of charge.

- Q. A contestant wants to use code violating nondisclosure agreements.

- A. Submitted solvers must not contain any rights issues. Please get in touch with the organizers if you have complicated matters.